Setting Up GCP & GA4

Guide to Google Setup with Kleene App

To ingest data from Google products into your Snowflake or Redshift data warehouse via the Kleene app, the data must be transferred into BigQuery (Google’s data warehouse) first. The setup for this is done within Google Cloud Platform (GCP) which is the home for all of Google’s cloud products.

The exceptions to this are:

- Google Sheets: Google Sheets can be ingested directly using Kleene’s Google Sheets OAuth connector.

- Universal Analytics (UA): The Universal Analytics connector in the Kleene app enables you to pull sampled UA data for each view within a UA container. This is limited to pulling data for the previous day only, so a historic backfill is not possible. You can find setup instructions here. The data is heavily aggregated and is limited by the granularity of the view it is pulling from. We strongly recommend migrating to GA4 as soon as you can as the data is far more useful and UA will be deprecated from 1st July 2023.

This document will guide you through the GCP setup process and the subsequent steps required to land data from UA, GA4 and Ads in your data warehouse.

Prerequisites:

- You will need a Google account to access Google Cloud Platform (GCP)

Setting up GCP:

- Login to your Google account and access GCP here: console.cloud.google.com. Start your free GCP trial and then add billing details to the billing section. Failing to add billing details before the end of the free trial will cause you to be cut off and the following setup will have to be redone. We therefore recommend adding billing details as soon as you start the trial.

- Create an organisation for your business within your account. Step by step instructions for this can be found here.

- Once you have created an organisation, you need to create a project and name it: <your_org_name>_kleene. Step by step instructions can be found here.

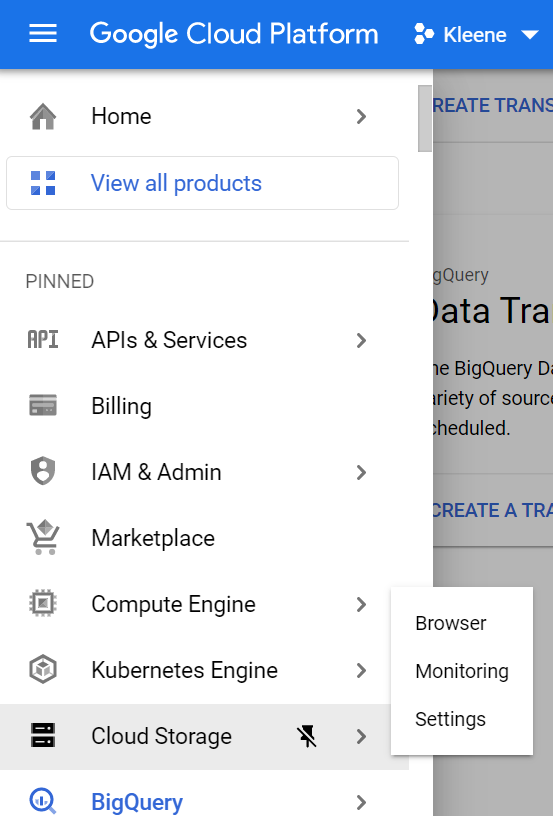

- Search for all required APIs and enable them within GCP. For Google Analytics, enable Google Analytics Reporting API. The ones you will most likely to require are:

- BigQuery Data Transfer API

- Google Analytics Reporting API

- Google Ads API

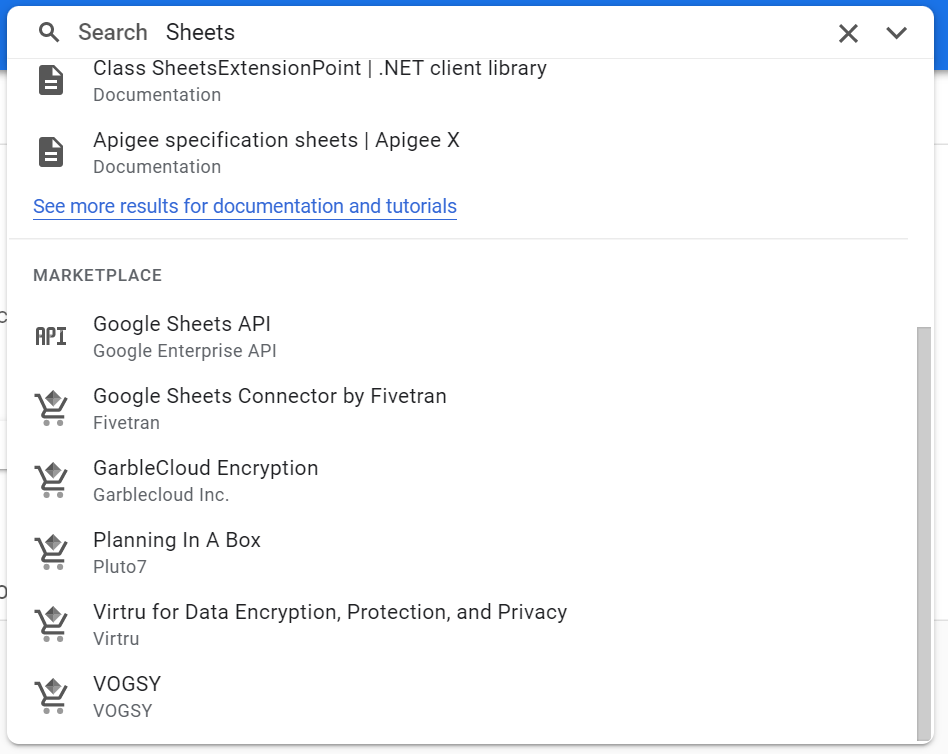

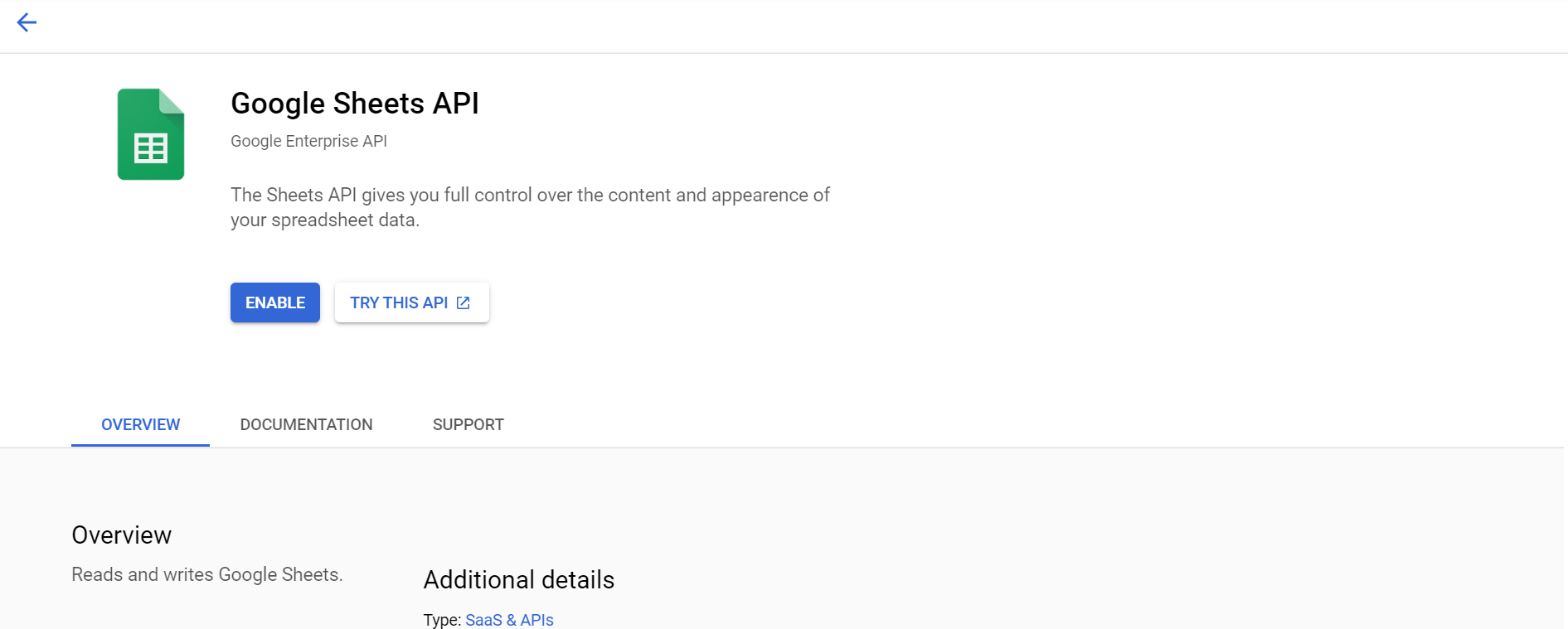

- For example, to enable the Google Sheets API:

- Search ‘Google Sheets API’ using the top banner search box

- Scroll down and select the option with the API logo

- Click the Enable button

Search Sheets API

Enable Sheets API

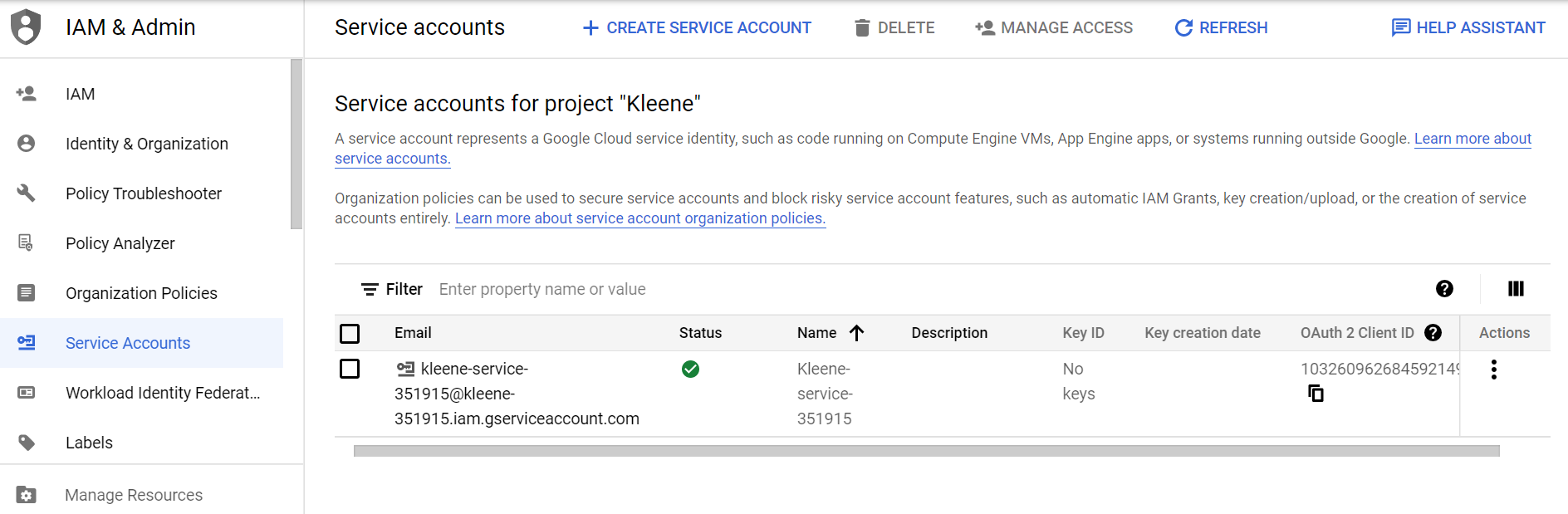

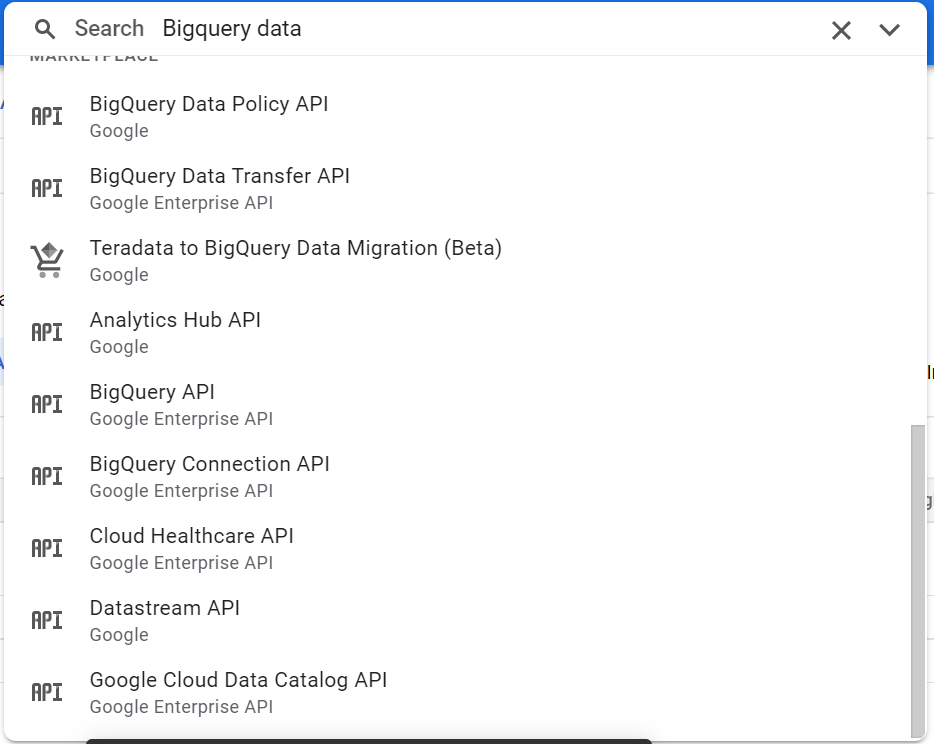

- You now need to generate service account credentials. Navigate to IAM & Admin > Service Accounts.

Click the create service account button and follow the steps through to create a service account. Give the account an appropriate name such as kleene_service.

Once you have generated the account, grant owner access to the project you set up above. You can also grant specific users access to this service account for monitoring purposes, however this is optional.

Once you have created the account, click on Actions > Manage Keys > Add Key > Create New Key

Select JSON and confirm. Save this text somewhere secure as it cannot be redownloaded.

Service account created

WarningYou can only ever create service account credentials once so when you generate them, remember to save them in a secure password safe / vault!

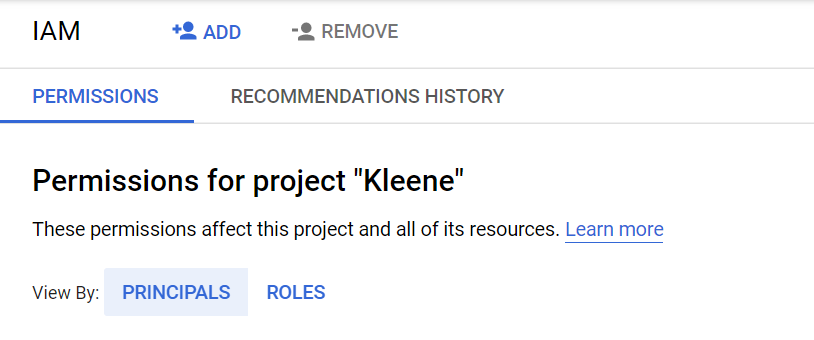

- Next navigate to IAM & Admin > IAM and click Add.

Add the client email (which you can find in the service account key file you downloaded a moment ago) and select the role as Owner.

Add IAM permissions

- A bucket now needs to be created to land data in the Kleene App. On the main menu, navigate to Cloud Storage.

Main Menu > Cloud Storage

Create a new bucket and give it a unique name such as kleene_bucket-1 (naming requirements). Set the location (single region) so that it matches the location of the data set you're transferring (Make sure the location is consistent with all the other locations you have set).

For Choose a default storage class for your data, select a storage class for the bucket. The default storage class is assigned by default to all objects uploaded to the bucket. We recommend using Standard.

Note:The Monthly cost estimate panel in the right pane estimates the bucket's monthly costs based on your selected storage class and location, as well as your expected data size and operations.

For Choose how to control access to objects, select an Access control option. Given that our app sits outside the Google network, here you'll need to make the bucket public by selected fine-grained access.

For Choose how to protect object data, select none and click the create bucket.

Note:Don’t worry if you can’t see data landing in the bucket you have created. You will see folders appear once you have set up and run extracts in the Kleene App.

That is all the core GCP setup complete, you are now ready to continue with the specific set up guides for each Google product (GA4, UA, Ads).

Additional Steps for Setting up GA4:

- To land GA4 data in your data warehouse, you have to first land it in BigQuery and then it can be pulled from there into your Snowflake or Redshift data warehouse.

The data can be transferred into BigQuery using the free BigQuery Data Transfer Service which allows data to land once per day (data streaming unavailable without high costs). - This service needs to be enabled like APIs you previously enabled. Search for BigQuery Data Transfer API and enable it. Refer to the image above.

Quick tip:Common issues with landing Google data in the warehouse are often caused by an API not being enabled. Always try searching for what you are attempting to do followed by ‘API’ and making sure they are all enabled!

Search BigQuery Data Transfer API

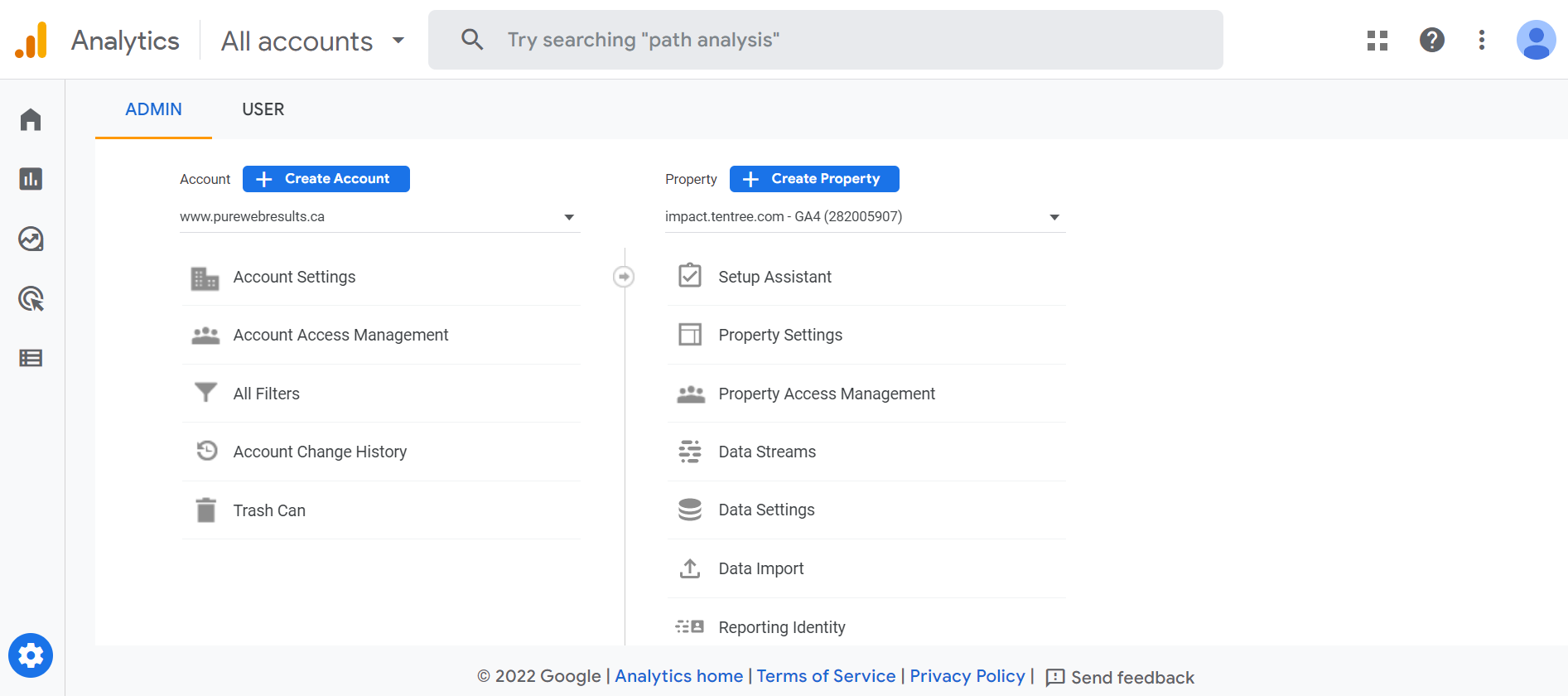

- Head to your Google Analytics frontend and make sure you have selected the correct GA4 instance that you wish to pull data from.

Click on the admin cog which is in the bottom left of the page and navigate to Account Access Management.

Account Access Management

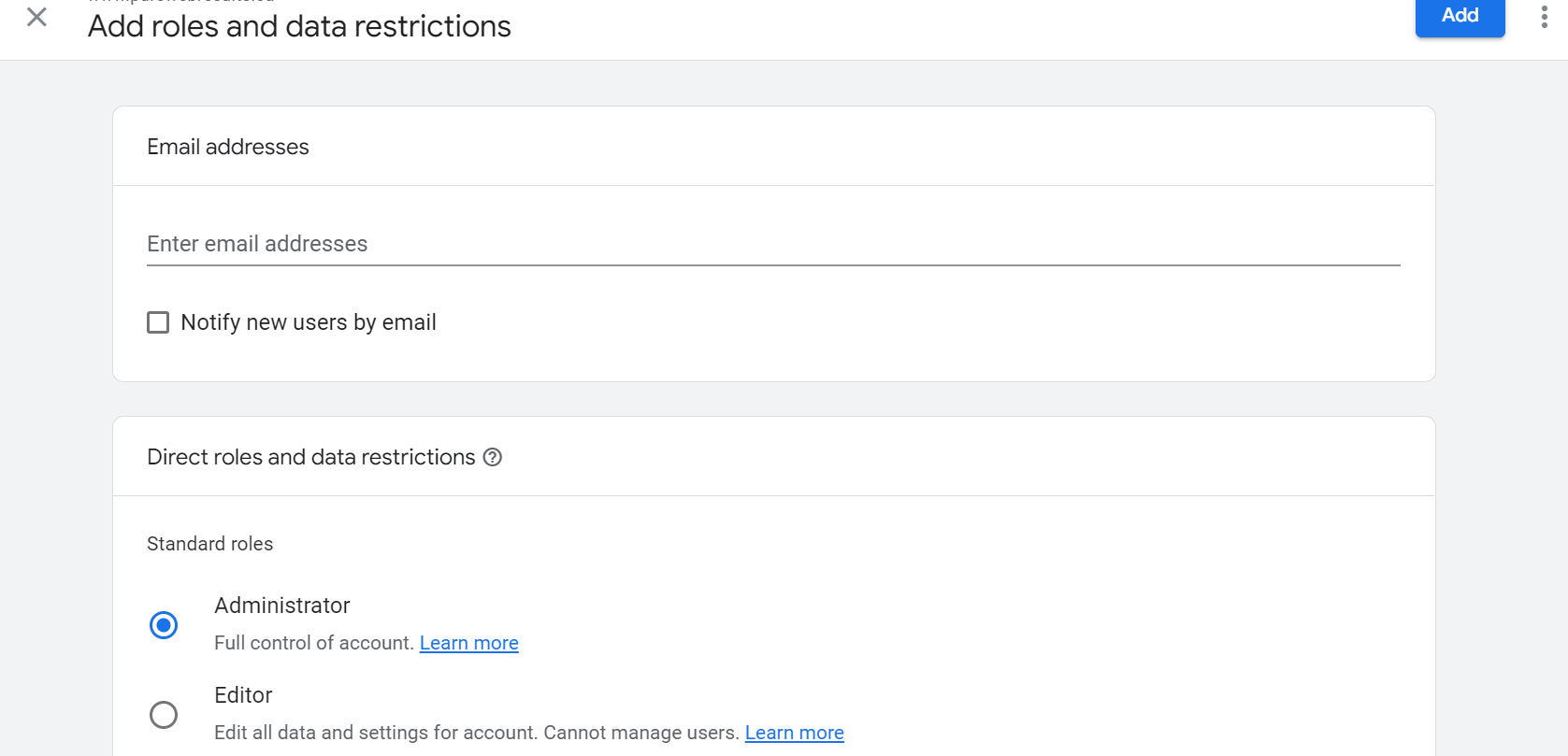

- Add the same service account email (from the JSON file you previously downloaded) as a user to the Analytics instance and select the Administrator role.

Add Service Account Email

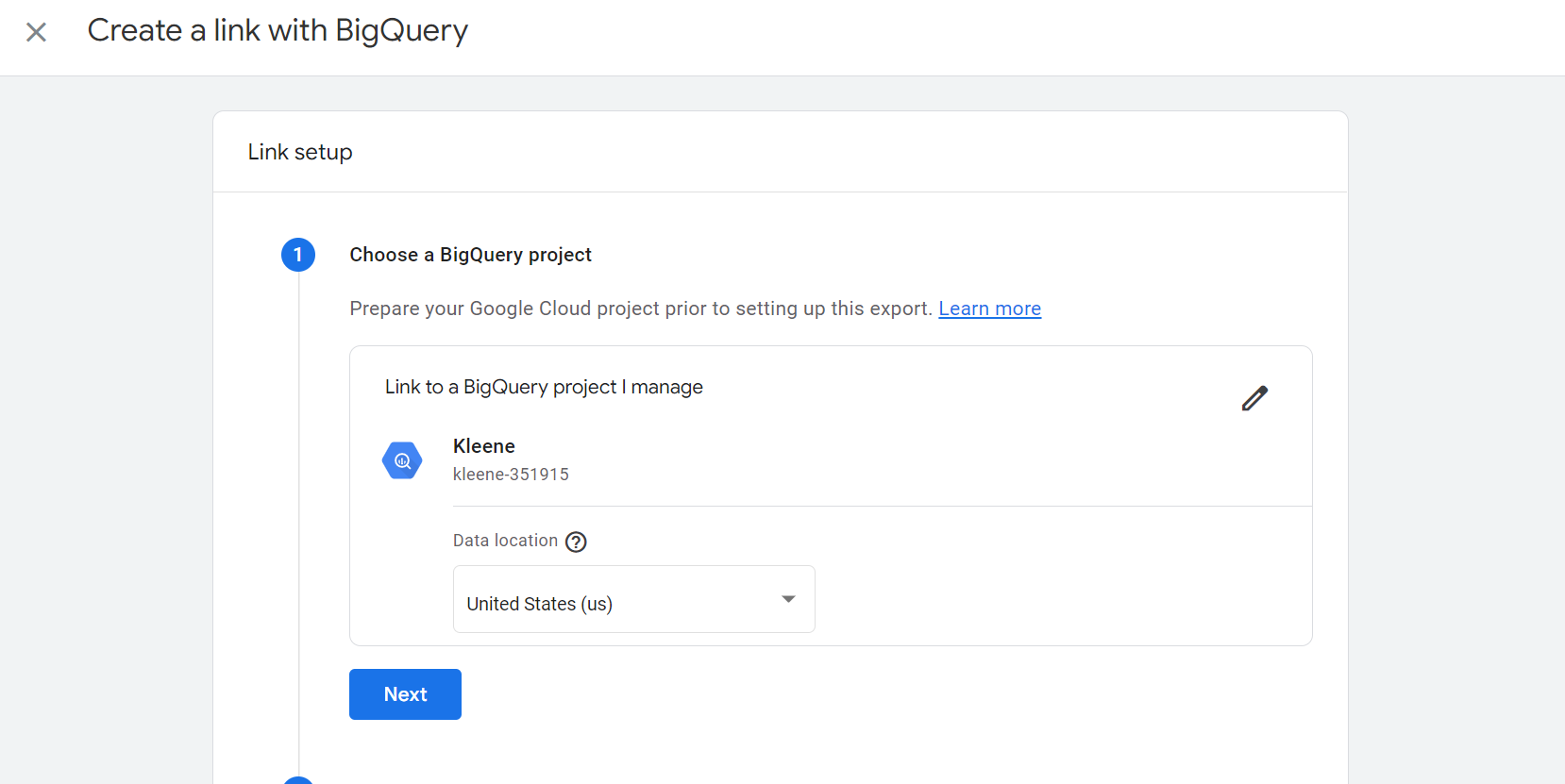

- Scroll down the list in the admin panel and select BigQuery Links: Create a new link and select the project id you have set up, the appropriate region (this region MUST match the region of the GCS bucket you created earlier in the GCP setup section) and set the frequency to daily.

Link BigQuery project

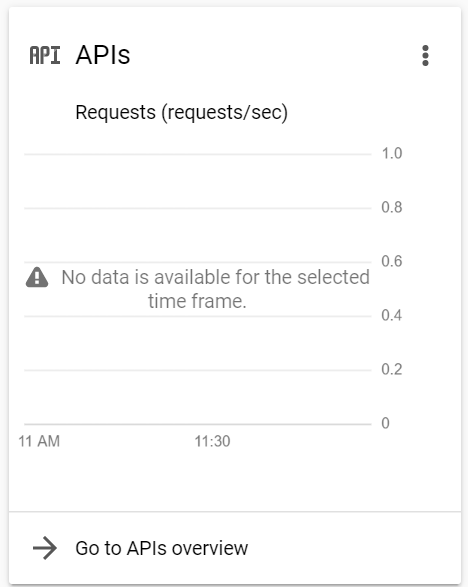

- It will take up to 24 hours for data to land in BigQuery so you now need to wait for this. On the GCP homepage dashboard, you will see this graph:

Once data has landed, a line plot will appear here 🔽

GCP Homepage graph

- After landing the data in BigQuery, it now needs to be flattened and transformed so that it’s in the correct format to ingest into your data warehouse. You may not need all of the tables here so select and use the ones you need.

In order to ensure each extract runs successfully in the Kleene app, we run each one daily pulling data for the latest available day and appending it to the ingest table in your data warehouse. If you have been transferring Analytics to BigQuery for a while and have built up a large volume of data that needs to be ingested, we recommend running a backfill / multiple backfills first.

Using Flat Events backfill table as example, copy the script below:

--flat_events

select

(select value.int_value from unnest(event_params) where key = 'ga_session_id') as ga_session_id,

event_date,

event_timestamp,

event_name,

event_previous_timestamp,

event_value_in_usd,

event_bundle_sequence_id,

event_server_timestamp_offset,

user_id,

user_pseudo_id,

user_first_touch_timestamp,

user_ltv.revenue as user_ltv_revenue,

user_ltv.currency as user_ltv_currency,

device.category,

device.mobile_brand_name,

device.mobile_model_name,

device.mobile_marketing_name,

device.mobile_os_hardware_model,

device.operating_system,

device.operating_system_version,

device.vendor_id,

device.advertising_id,

device.language,

device.is_limited_ad_tracking,

device.time_zone_offset_seconds,

device.browser as device_browser,

device.browser_version as device_browser_version,

device.web_info.browser as device_web_info_browser,

device.web_info.browser_version as device_web_info_browser_version,

device.web_info.hostname as device_web_info_hostname,

geo.continent,

geo.country,

geo.region,

geo.city,

geo.sub_continent,

geo.metro,

app_info.id,

app_info.version,

app_info.install_store,

app_info.firebase_app_id,

app_info.install_source,

traffic_source.name,

traffic_source.medium,

traffic_source.source,

stream_id,

platform,

event_dimensions.hostname as event_dimensions_hostname,

ecommerce.total_item_quantity,

ecommerce.purchase_revenue_in_usd,

ecommerce.purchase_revenue,

ecommerce.refund_value_in_usd,

ecommerce.refund_value,

ecommerce.shipping_value_in_usd,

ecommerce.shipping_value,

ecommerce.tax_value_in_usd,

ecommerce.tax_value,

ecommerce.unique_items,

ecommerce.transaction_id,

concat(user_pseudo_id,event_timestamp,event_name,row_number() over(partition by user_pseudo_id, event_timestamp, event_name)) as join_key

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_271458848.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between '20210101'

and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))--flat_event_parameters

select

max(ga_session_id) over (partition by event_timestamp,event_name) as ga_session_id,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key,

* except(ga_session_id)

from (

select

user_pseudo_id,

case when event_params.key = 'ga_session_id' then event_params.value.int_value else null end as ga_session_id,

event_timestamp,

event_name,

event_params.key,

event_params.value.string_value,

event_params.value.int_value,

event_params.value.float_value,

event_params.value.double_value,

dedup_id

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631779.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between '20210101' and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

unnest(event_params) as event_params)--flat_user_properties

select

user_pseudo_id,

event_timestamp,

event_name,

user_properties.key,

user_properties.value.string_value,

user_properties.value.int_value,

user_properties.value.float_value,

user_properties.value.double_value,

user_properties.value.set_timestamp_micros,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between '20210101' and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

unnest(user_properties) as user_properties--flat_items

select

user_pseudo_id,

event_timestamp,

event_name,

items.item_id,

items.item_name,

items.item_brand,

items.item_variant,

items.item_category,

items.item_category2,

items.item_category3,

items.item_category4,

items.item_category5,

items.price_in_usd,

items.price,

items.quantity,

items.item_revenue_in_usd,

items.item_revenue,

items.item_refund_in_usd,

items.item_refund,

items.coupon,

items.affiliation,

items.location_id,

items.item_list_id,

items.item_list_name,

items.item_list_index,

items.promotion_id,

items.promotion_name,

items.creative_name,

items.creative_slot,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between '20210101' and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

unnest(items) as itemsselect

max(ga_session_id) over (partition by event_timestamp,event_name) as ga_session_id,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key,

* except(ga_session_id)

from (

select

user_pseudo_id,

case when event_params.key = 'ga_session_id' then event_params.value.int_value else null end as ga_session_id,

event_timestamp,

event_name,

privacy_info.analytics_storage,

privacy_info.ads_storage,

privacy_info.uses_transient_token,

dedup_id

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between '20210101' and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

--unnest(privacy_info) as privacy_info)

unnest(event_params) as event_params)For each one, add to the top of the script (adjusting for each name):

create or replace table flat_events_backfill asEdit the client and analytics dataset parts of the script in here:

FROM

-- change this to your google analytics 4 export location in bigquery

`client.analytics_271458848.events_*`

WHERE

-- define static and/or dynamic start and end date_table_suffix

between '20210101'

and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))Now looking at the Flat Event Parameters backfill script

Add to the top of the script:

create or replace table flat_event_parameters_backfill asEdit the client and analytics dataset parts of the script in here:

FROM

-- change this to your google analytics 4 export location in bigquery

`client.analytics_271458848.events_*`

WHERE

-- define static and/or dynamic start and end date

between '20210101'

and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))The above needs to be followed for Tables: Flat User Properties, Flat Items, Flat User Privacy Info which can all be found in the embedded scripts mentioned above.

You might not have data for these tables so you might not require them.

To create the yesterday tables (which will be set to ingest daily), the yesterday scripts for each of the tables need to be run similar to above, however we also need to schedule the scripts so they run daily. These scripts can be found below:

We will run through the yesterday script for Flat Events below in steps 8-12 but they all follow the same setup process.

- Find the Yesterday Flat Events script:

--flat events

select

(select value.int_value from unnest(event_params) where key = 'ga_session_id') as ga_session_id,

event_date,

event_timestamp,

event_name,

event_previous_timestamp,

event_value_in_usd,

event_bundle_sequence_id,

event_server_timestamp_offset,

user_id,

user_pseudo_id,

user_first_touch_timestamp,

user_ltv.revenue as user_ltv_revenue,

user_ltv.currency as user_ltv_currency,

device.category,

device.mobile_brand_name,

device.mobile_model_name,

device.mobile_marketing_name,

device.mobile_os_hardware_model,

device.operating_system,

device.operating_system_version,

device.vendor_id,

device.advertising_id,

device.language,

device.is_limited_ad_tracking,

device.time_zone_offset_seconds,

device.browser as device_browser,

device.browser_version as device_browser_version,

device.web_info.browser as device_web_info_browser,

device.web_info.browser_version as device_web_info_browser_version,

device.web_info.hostname as device_web_info_hostname,

geo.continent,

geo.country,

geo.region,

geo.city,

geo.sub_continent,

geo.metro,

app_info.id,

app_info.version,

app_info.install_store,

app_info.firebase_app_id,

app_info.install_source,

traffic_source.name,

traffic_source.medium,

traffic_source.source,

stream_id,

platform,

event_dimensions.hostname as event_dimensions_hostname,

ecommerce.total_item_quantity,

ecommerce.purchase_revenue_in_usd,

ecommerce.purchase_revenue,

ecommerce.refund_value_in_usd,

ecommerce.refund_value,

ecommerce.shipping_value_in_usd,

ecommerce.shipping_value,

ecommerce.tax_value_in_usd,

ecommerce.tax_value,

ecommerce.unique_items,

ecommerce.transaction_id,

concat(user_pseudo_id,event_timestamp,event_name,row_number() over(partition by user_pseudo_id, event_timestamp, event_name)) as join_key

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_271458848.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between format_date('%Y%m%d',date_sub(current_date(), interval 2 day))

and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))--flat_event_parameters

select

max(ga_session_id) over (partition by event_timestamp,event_name) as ga_session_id,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key,

* except(ga_session_id)

from (

select

user_pseudo_id,

case when event_params.key = 'ga_session_id' then event_params.value.int_value else null end as ga_session_id,

event_timestamp,

event_name,

event_params.key,

event_params.value.string_value,

event_params.value.int_value,

event_params.value.float_value,

event_params.value.double_value,

dedup_id

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between format_date('%Y%m%d',date_sub(current_date(), interval 2 day)) and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

unnest(event_params) as event_params)--flat_user_properties

select

user_pseudo_id,

event_timestamp,

event_name,

user_properties.key,

user_properties.value.string_value,

user_properties.value.int_value,

user_properties.value.float_value,

user_properties.value.double_value,

user_properties.value.set_timestamp_micros,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between format_date('%Y%m%d',date_sub(current_date(), interval 2 day)) and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

unnest(user_properties) as user_properties--flat_items

select

user_pseudo_id,

event_timestamp,

event_name,

items.item_id,

items.item_name,

items.item_brand,

items.item_variant,

items.item_category,

items.item_category2,

items.item_category3,

items.item_category4,

items.item_category5,

items.price_in_usd,

items.price,

items.quantity,

items.item_revenue_in_usd,

items.item_revenue,

items.item_refund_in_usd,

items.item_refund,

items.coupon,

items.affiliation,

items.location_id,

items.item_list_id,

items.item_list_name,

items.item_list_index,

items.promotion_id,

items.promotion_name,

items.creative_name,

items.creative_slot,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between format_date('%Y%m%d',date_sub(current_date(), interval 2 day)) and format_date('%Y%m%d',date_sub(current_date(), interval 1 day)),

unnest(items) as itemsselect

max(ga_session_id) over (partition by event_timestamp,event_name) as ga_session_id,

concat(user_pseudo_id,event_timestamp,event_name,dedup_id) as join_key,

* except(ga_session_id)

from (

select

user_pseudo_id,

case when event_params.key = 'ga_session_id' then event_params.value.int_value else null end as ga_session_id,

event_timestamp,

event_name,

privacy_info.analytics_storage,

privacy_info.ads_storage,

privacy_info.uses_transient_token,

dedup_id

from (

select

row_number() over(partition by user_pseudo_id, event_timestamp, event_name) as dedup_id,

*

from

-- change this to your google analytics 4 export location in bigquery

`client.analytics_220631479.events_*`

where

-- define static and/or dynamic start and end date

_table_suffix between format_date('%Y%m%d',date_sub(current_date(), interval 2 day)) and format_date('%Y%m%d',date_sub(current_date(), interval 1 day))),

--unnest(privacy_info) as privacy_info)

unnest(event_params) as event_params)Nothing needs to be added to the top of the script this time but you do need to edit the highlighted section like above:

client.analytics_271458848.events_*

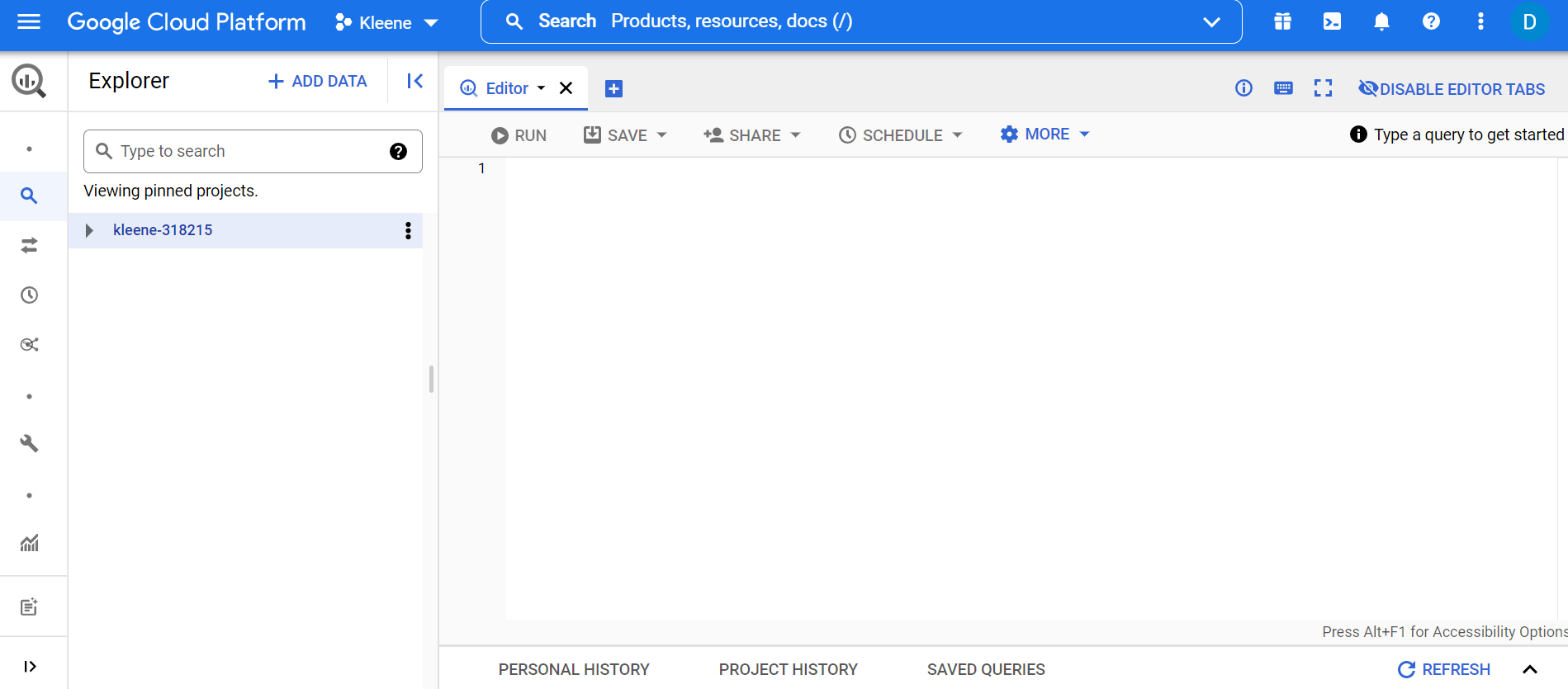

- Copy and paste this into the BigQuery Console within your GCP instance:

BigQuery Console

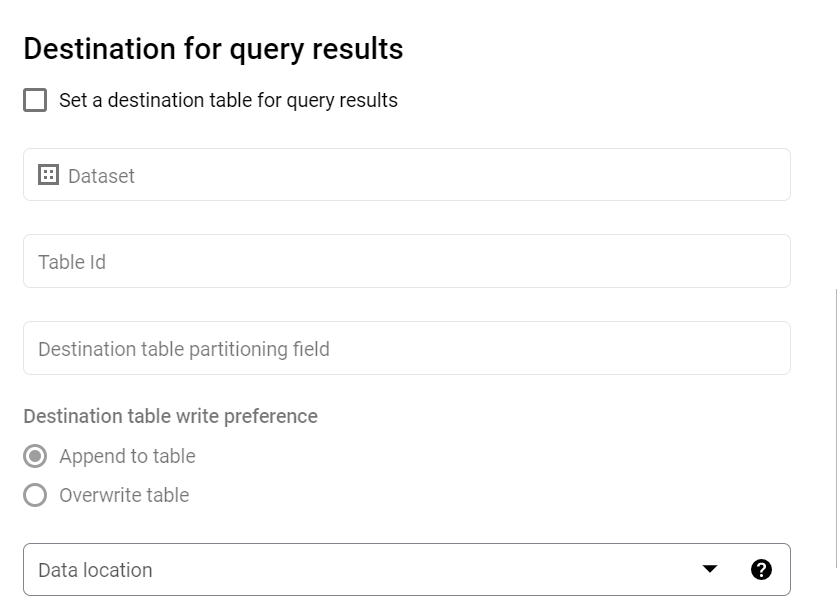

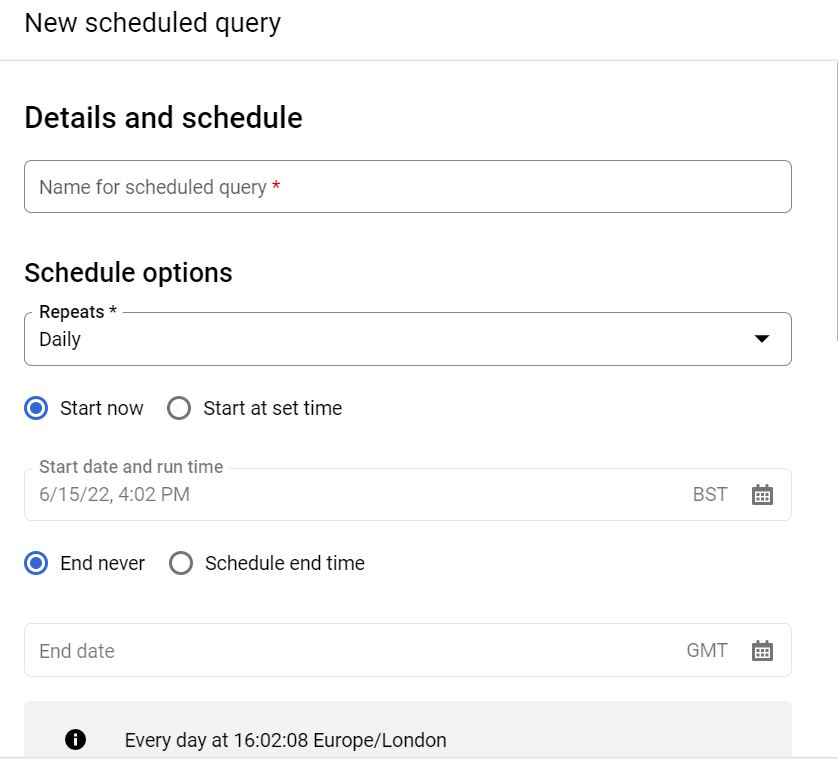

- Click Schedule > Create new scheduled query

Select an appropriate name (eg. flat_events_yesterday_overwrite) and set the schedule as daily with an appropriate start time (we usually set this between 1100 - 1400 GMT).

Note:The time Google lands data each day can vary so set the schedule with this in mind.

Select the dataset that the table you are querying is in and set the table id as the name of the table that the query is going to write to (e.g. flat_events_yesterday). Leave the Destination table Partitioning Field blank.

Set the write preference to overwrite and the data location as the same location as your GCS bucket that you set up in the GCP setup guide.

Destination Query Results

Set up scheduled query

Setting up the BigQuery Connector in the Kleene App:

The BigQuery connector can be used to land GA4 data, Ads data, YouTube data etc.

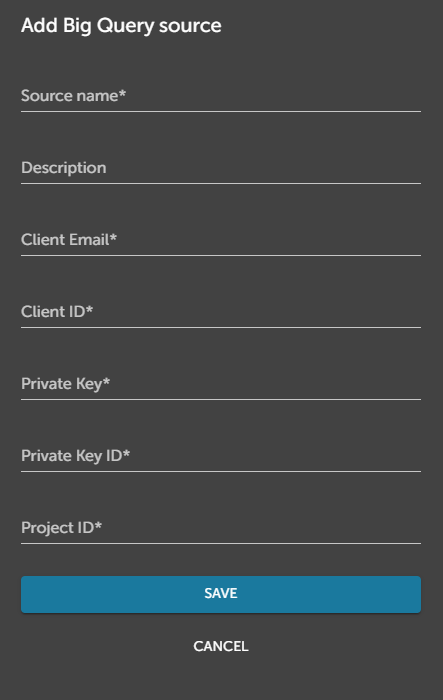

Add BigQuery Source

These fields can all be populated from the matching fields detailed in the key files you generated and downloaded at the beginning of this guide (the service account key credentials). The source name can be anything e.g. BigQuery Analytics Source

Note:The private key MUST include everything within the speech marks.

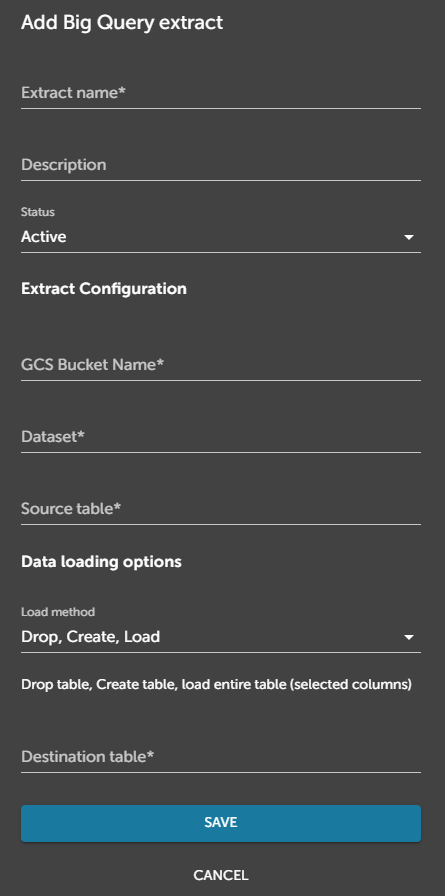

Setting up a BigQuery Connector Extract:

Using the scripts, detailed above, you will have created a flattened backfill table for the GA4 events data and a flattened events table for the previous day which will get overwritten daily (and the same event_parameters, user_properties, items etc).

You will now need to set-up an unscheduled extract to land the backfill table once (Drop, Create, Load) and a daily scheduled extract to land the events table for yesterday (we recommend setting this up as Append and then creating a transform to delete from the ingest table once the data has been successfully carried downstream).

Add the GCS bucket name, dataset (which contains the table you are ingesting e.g. analytics_XXXXXXXXX) and the source table (the table you are ingesting e.g. flat_events_yesterday). Click Save and run.

If you are landing analytics data in Big Query for the first time, you will only be able to have a backfill of 1 day so you can schedule a daily pull of the flattened events yesterday table and skip the backfill table creation.

Add BigQuery extract

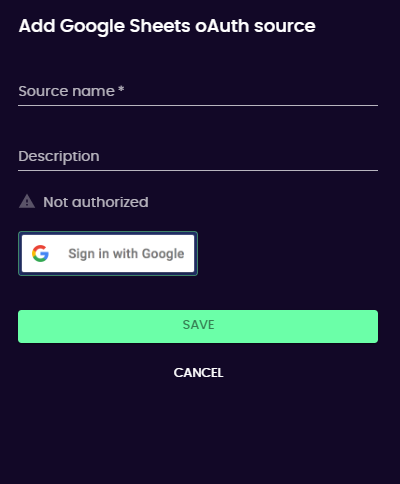

Setting up Google Sheets:

- Setting up the Google Sheets oAuth source is as simple as signing in with Google and saving the config:

Add GSheets Source

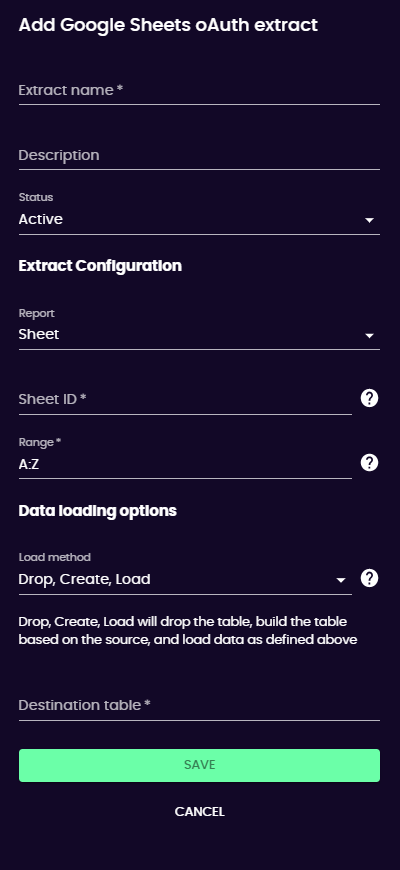

- Then adding an extract requires filling in these details using the docs as a guide:

Add GSheets extract

Updated 3 months ago