Practical Worked Examples

This section provides practical, worked examples to help you understand and implement data deduplication techniques. We'll cover establishing primary keys, basic deduplication methods, handling snapshots, and dealing with Change Data Capture (CDC) streams.

Establishing the Primary Key

Establishing the primary key is an important first step in data deduplication because it determines what uniquely identifies each record in a dataset. You will rely on this key throughout the rest of your processing.

Compound Keys

In many cases, a single column is insufficient to uniquely identify records, so a compound key is needed. A compound key is built by concatenating several columns, which together define the uniqueness of a row, into a new column that becomes the primary key.

Minimize Columns:

While using compound keys, aim to use the minimal number of columns necessary to uniquely identify records. This reduces complexity and improves performance.

Introduce a separating character to avoid colliding strings

When constructing compound keys by concatenating multiple columns, introducing a separating character (e.g., a hyphen or pipe) between column values is crucial. This practice prevents string collisions where different combinations of values might otherwise produce identical concatenated results.

Example:

Without Separator:

CONCAT('123', '45') produces '12345'

CONCAT('12', '345') also produces '12345'

With Separator:

CONCAT('123', '-', '45') produces '123-45'

CONCAT('12', '-', '345') produces '12-345'

By using a separating character, you ensure that different combinations of column values produce different concatenated strings, avoiding key collisions. This holds as long as the separator does not itself appear in the column values, so choose a character that cannot occur in your data.
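The collision described above can be reproduced in a few lines. This is a minimal sketch in Python rather than SQL; `compound_key` is a hypothetical helper introduced only for illustration.

```python
def compound_key(*parts, sep=""):
    """Join column values into one key string, optionally with a separator."""
    return sep.join(str(p) for p in parts)

# Without a separator, two different rows collapse to the same key.
collides = compound_key("123", "45") == compound_key("12", "345")

# With a separator, the keys stay distinct.
distinct = compound_key("123", "45", sep="-") != compound_key("12", "345", sep="-")

# Caveat: if the separator can occur inside a value, collisions come back.
still_collides = compound_key("a-b", "c", sep="-") == compound_key("a", "b-c", sep="-")

print(collides, distinct, still_collides)
```

The last comparison is why the separator should be a character that cannot appear in the source columns.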

Handle Nulls:

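Ensure that the columns you choose for your primary key handle null values appropriately. In many SQL dialects, concatenating a null with other values returns null, so a single missing column can wipe out the whole key and make unrelated rows look identical. Replace nulls with an explicit placeholder (for example with COALESCE) before building the key.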
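As an illustration of the null problem, the sketch below uses Python's built-in `sqlite3` module, where `||` concatenation follows the standard SQL rule that anything combined with NULL is NULL. The `orders` table, its columns, and the `<NULL>` placeholder are assumptions made for the example; other engines may treat nulls differently, so check your own dialect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id TEXT, region TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("C1", "EU"), ("C2", None)],  # the second row has a NULL region
)

rows = conn.execute("""
    SELECT customer_id || '-' || region                     AS naive_key,
           customer_id || '-' || COALESCE(region, '<NULL>') AS safe_key
    FROM orders
    ORDER BY customer_id
""").fetchall()

for naive_key, safe_key in rows:
    print(naive_key, safe_key)
```

For the row with the missing region, `naive_key` comes back as NULL (Python `None`), while `safe_key` still carries the customer identifier.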

Hashing Compound Keys for Neatness and Conciseness:

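You can use hashing (e.g., MD5) to turn a compound key built from multiple columns into a single fixed-length identifier. A hash is not guaranteed to be unique, but collisions are extremely unlikely for this purpose, and the result is neater and simpler to work with than a long concatenated string. Keep the separator between columns when hashing, for the same reason as above.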

Example Query:

SELECT *, MD5(CONCAT(column1, '|', column2, '|', column3)) AS compound_key FROM your_table;
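The same idea can be sketched outside the database with Python's `hashlib`. The `hashed_key` helper, the `|` separator, and the `<NULL>` placeholder are illustrative assumptions, not part of any connector or library.

```python
import hashlib

def hashed_key(*parts, sep="|", null_token="<NULL>"):
    """Build a fixed-length key from several column values.

    Nulls (None) are replaced by a placeholder and values are joined with a
    separator before hashing, so ('123', '45') and ('12', '345') differ.
    """
    normalized = [null_token if p is None else str(p) for p in parts]
    joined = sep.join(normalized)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()

key_a = hashed_key("123", "45", "x")
key_b = hashed_key("12", "345", "x")
key_with_null = hashed_key("123", None, "x")

print(key_a != key_b)   # different inputs, different keys
print(len(key_a))       # an MD5 hex digest is always 32 characters
```

Because the function is deterministic, the same column values always yield the same key, which is what lets you compare rows across loads.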

Basic Deduplication

To perform basic deduplication, identify duplicate records and retain only one instance of each.

Example Query:

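WITH ranked_records AS ( SELECT *, ROW_NUMBER() OVER (PARTITION BY unique_key_column ORDER BY load_timestamp DESC) AS rn FROM your_table ) SELECT * FROM ranked_records WHERE rn = 1;

Selecting rn = 1 keeps exactly one row per key: the most recently loaded one. Deleting by unique_key_column alone would not work, because all copies of a duplicate share that key and every one of them would be removed. Write the result to a new table, or use it to replace the original.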
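To see the keep-the-latest-row pattern end to end, here is a small runnable sketch using Python's built-in `sqlite3` module (window functions need SQLite 3.25 or later). The sample rows and the `value` column are made up for the example; it selects the surviving rows rather than deleting anything, so the source table is left untouched.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE your_table (unique_key_column TEXT, value TEXT, load_timestamp TEXT)"
)
conn.executemany(
    "INSERT INTO your_table VALUES (?, ?, ?)",
    [
        ("k1", "old", "2024-01-01"),
        ("k1", "new", "2024-01-02"),   # duplicate key, loaded later
        ("k2", "only", "2024-01-01"),
    ],
)

# Rank rows within each key, newest first, and keep only the top-ranked row.
deduped = conn.execute("""
    WITH ranked_records AS (
        SELECT unique_key_column, value, load_timestamp,
               ROW_NUMBER() OVER (
                   PARTITION BY unique_key_column
                   ORDER BY load_timestamp DESC
               ) AS rn
        FROM your_table
    )
    SELECT unique_key_column, value, load_timestamp
    FROM ranked_records
    WHERE rn = 1
    ORDER BY unique_key_column
""").fetchall()

for row in deduped:
    print(row)
```

Each key appears once in `deduped`, and for `k1` the later row is the one that survives.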

Snapshots

Full-Append Load Strategy

The simplest way to manage snapshots is a full-append load strategy. Each load appends the full set of records to the existing dataset, typically by selecting the "Append" insert and "Full" load options in the relevant kleene connector. The _kleene_extract_date field then shows which load each record came from.
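A full-append table can be queried per load by filtering on the extract date. The sketch below uses Python's `sqlite3` with a hypothetical `snapshots` table; it assumes `_kleene_extract_date` holds a sortable value, and the other column names and sample dates are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE snapshots (product_id TEXT, price REAL, _kleene_extract_date TEXT)"
)
conn.executemany(
    "INSERT INTO snapshots VALUES (?, ?, ?)",
    [
        ("p1", 10.0, "2024-01-01"), ("p2", 20.0, "2024-01-01"),  # first load
        ("p1", 10.0, "2024-01-02"), ("p2", 25.0, "2024-01-02"),  # second load
    ],
)

# How many rows arrived in each load?
rows_per_load = conn.execute("""
    SELECT _kleene_extract_date, COUNT(*)
    FROM snapshots
    GROUP BY _kleene_extract_date
    ORDER BY _kleene_extract_date
""").fetchall()

# The most recent snapshot is simply the rows from the latest extract date.
latest_snapshot = conn.execute("""
    SELECT product_id, price
    FROM snapshots
    WHERE _kleene_extract_date = (SELECT MAX(_kleene_extract_date) FROM snapshots)
    ORDER BY product_id
""").fetchall()

print(rows_per_load)
print(latest_snapshot)
```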

Storage-Constrained Snapshots

Full snapshots can add up to a considerable volume of data. If storage is a concern and you only want to keep the changes between snapshots, construct a primary key at the new level of granularity and use it to identify changed records.

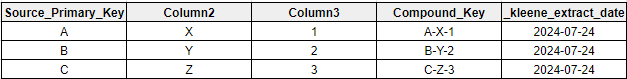

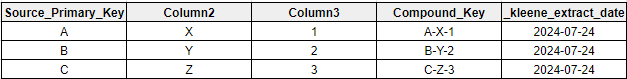

Step 1: Create a Primary Key

Construct a primary key (Compound Key) at the new level of granularity.

Insert the result into a new table. This table will be your historical record going forward.

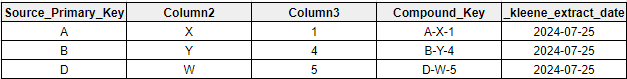

Step 2: Identify Changed Records

Compare new snapshot with historical record.

Historical Record:

New Snapshot:

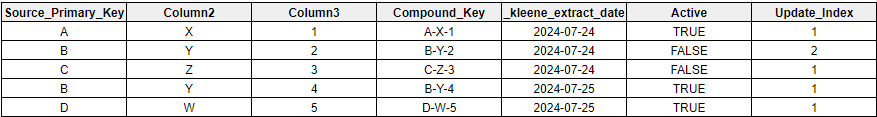

SQL Steps:

- Remove compound key full duplicates.

- Append data into new table.

- Ensure rows which no longer exist in the snapshot are tagged inactive.

- Create a ROW_NUMBER window function index, partitioned by the source primary key and ordered by _kleene_extract_date descending. This assigns the value 1 to the most recent record for each source key, allowing you to easily select the current state.
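The four steps above can be sketched as follows, using Python's `sqlite3` (SQLite 3.25 or later for the window function). This is one possible reading of the steps, not a definitive implementation: the `history` and `snapshot` tables, the `product_id` and `price` columns, the `is_active` flag, and the sample data are all assumptions. It also assumes the key columns are never null; otherwise wrap them in COALESCE first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE history (
        product_id TEXT, price TEXT, compound_key TEXT,
        _kleene_extract_date TEXT, is_active INTEGER
    );
    CREATE TABLE snapshot (product_id TEXT, price TEXT, _kleene_extract_date TEXT);

    -- Historical record built from the first load.
    INSERT INTO history VALUES
        ('p1', '10', 'p1|10', '2024-01-01', 1),
        ('p2', '20', 'p2|20', '2024-01-01', 1),
        ('p3', '30', 'p3|30', '2024-01-01', 1);

    -- New snapshot: p1 unchanged (and duplicated), p2 changed, p3 missing.
    INSERT INTO snapshot VALUES
        ('p1', '10', '2024-01-02'),
        ('p1', '10', '2024-01-02'),
        ('p2', '25', '2024-01-02');
""")

# Steps 1 and 2: drop full duplicates (DISTINCT, plus compound keys already
# active in history) and append only the remaining, changed rows.
conn.execute("""
    INSERT INTO history
    SELECT DISTINCT product_id, price, product_id || '|' || price,
           _kleene_extract_date, 1
    FROM snapshot
    WHERE product_id || '|' || price NOT IN (
        SELECT compound_key FROM history WHERE is_active = 1
    )
""")

# Step 3: tag rows whose compound key no longer appears in the snapshot.
conn.execute("""
    UPDATE history
    SET is_active = 0
    WHERE compound_key NOT IN (SELECT product_id || '|' || price FROM snapshot)
""")

# Step 4: rank rows per source key, newest first; rn = 1 is the latest record.
latest = conn.execute("""
    SELECT product_id, price, _kleene_extract_date, is_active
    FROM (
        SELECT *, ROW_NUMBER() OVER (
            PARTITION BY product_id
            ORDER BY _kleene_extract_date DESC
        ) AS rn
        FROM history
    )
    WHERE rn = 1
    ORDER BY product_id
""").fetchall()

for row in latest:
    print(row)
```

In this run only the changed `p2` row is appended, so the history grows by one row instead of a full snapshot. Filtering `latest` on `is_active = 1` gives the current state without the removed `p3`.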

Changed Records:

CDC

Coming soon
