Quality Assurance (QA)

Quality assurance (QA) is of the utmost importance when delivering work. QA can prevent disappointing launches and ensure standards meet what has been scoped. This is even more so the case with data. If data has not been subjected to QA, then the users will be at risk of making potentially fatal decisions based on bad data.

Additional reasons why QA is essential include:

- Increases output efficiency, reduces duplication of work and increases repeatability in the future

- Improves reactivity to data issues through surfacing of unit tests through a unit tests dashboard

- Maintains stakeholder trust and client relations

- Increases analyst confidence in outputs, allowing for faster ways of working

Although bedding in a QA process can take time, the dividends on the other end (as seen above) can pay-off very well, we can all do a little bit to help ourselves a lot in the short-term, and make iterative changes to help holistically in the long-term.

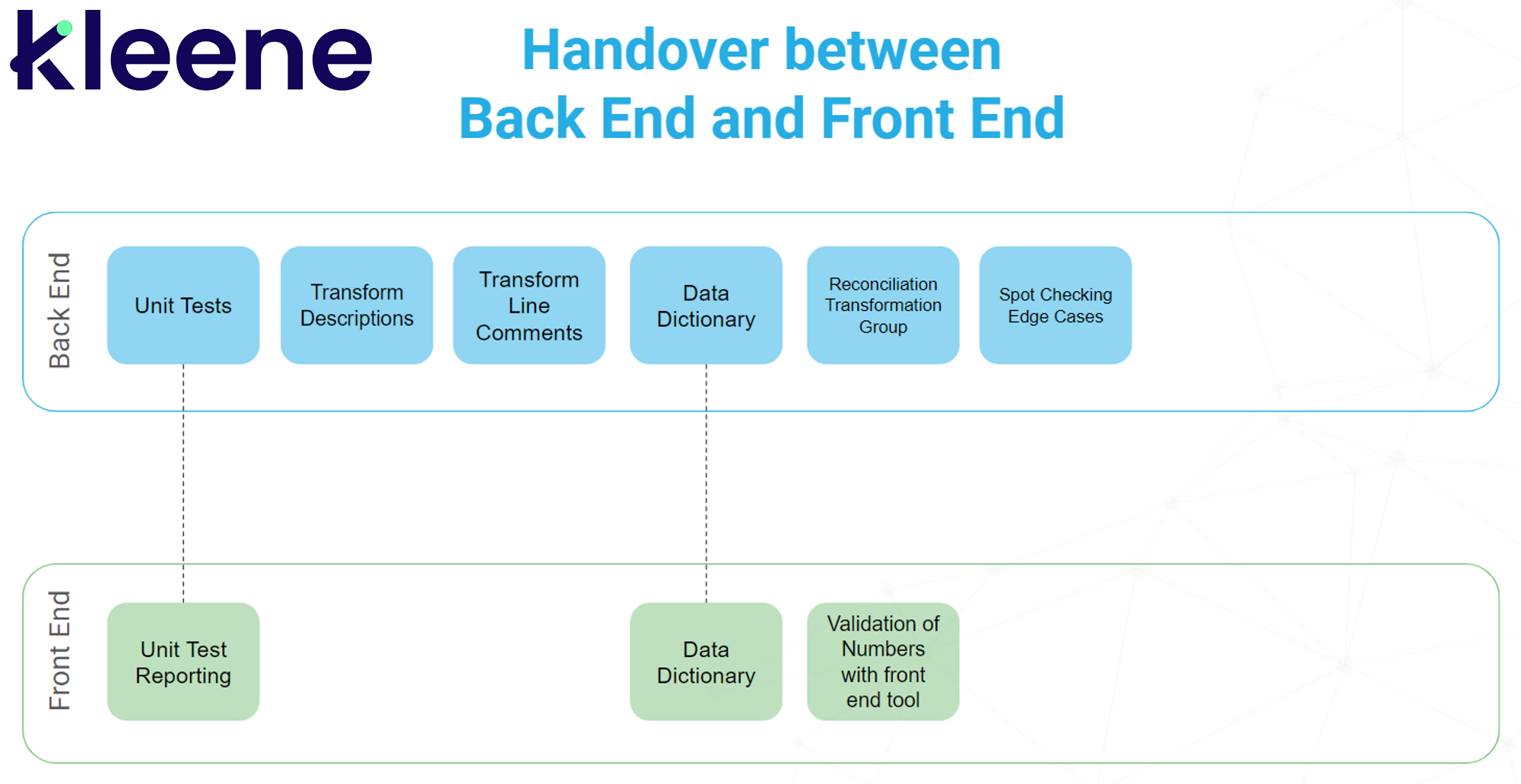

What can be done to ensure a smooth peer-review / internal QA process between a analytics engineering team (backend) and an insights team (frontend).

There are 4 core facets and 3 sub-facets related within the data enablement internal QA process, which we should all be doing when building a data warehouse. These are as follows:

- Unit tests - See here for our approach and methodology for unit testing, these underpin our overall internal QA process and ensure success of the running of our pipelines.

- Transform descriptions - We need to ensure that wording related to every transform is prevalent within the transform description defining what the aim of the transform is. This should be no more than 2-3 sentences and should give a holistic flavour of the transform, with transform line comments outlining the nitty gritty detail related to a transform.

- Transform line descriptions - There are a couple of different scenarios where line comments should be implemented:

- Line comments for any time discreet logic is being applied to describe what we're doing. Examples include partitioning of data, grouping of data, case statements, etc.

- Line comments on any join and expected cardinality of said join (one-to-one, one-to-many, many-to-many should be avoided and a joining table should be used in this scenario).

- Primary and foreign keys defined, where relevant.

- Naming conventions followed as per the data modelling.

- Reconciliation transformation group - tbc, part of this should be done by static checks via unit testing, but these should enable us to evidence reconciliation to data at a static point in time, for example costs coming through from google ads in 2021 matching up with whatever is stated on the front-end. There should also be some transforms to spot check edge cases embedded in to this group, just to check logic is functioning as expected.

- Data dictionary - tbc - this will be outlined in a following iteration of our internal QA methodology , however there will be standardised scripts to use to pull out column names and formats dependent on when using Redshift or Snowflake, with definitions on calculated columns needing to be defined.

What should I be doing when QAing work?

As a starting place, you should go through the work that has been undertaken related to an outcome and ensure all the (relevant) steps in point 2 have taken place. Check transform line comments make sense and are relevant, spot check cardinality of joins, ensure all naming conventions are followed, that unit tests have been implemented properly and are all passing as standard, and that transform descriptions have been filled in for anything past the _cleaned stage.

Updated 5 months ago